
Test multiple NVMe namespaces

The NVMe specification provides a “Namespace” feature, which can split a physical NVMe device into several logical devices that are managed like independent NVMe devices.

The feature is similar to disk partitioning, but the splitting is handled in the device firmware layer. The big difference is that namespaces have no fixed order or position on the storage, and the system recognises each of them as an individual device. In that respect it looks more like RAID virtual drives or ZFS datasets.

It is said that using multiple namespaces brings the following benefits:

- Improve security with different encryption keys per namespace.

- Increase device endurance and write performance by over-provisioning.

- Write-protect a recovery area and the like.

This is an optional feature, so unfortunately most NVMe SSD products, especially consumer ones, don't support it. It would be very interesting if it works. I'm hopeful that disk management gets simpler with multiple namespaces in an environment that goes back and forth between virtual and physical. (On my system, a single SSD is shared between Proxmox VE on the physical host and partitions passed to a VM by raw device mapping. It's really bothersome. If they were split into separate namespaces, it might be better.)

Setup

- Hardware
  - Lenovo ThinkServer TS150
  - Supermicro X10SRL-F
  - SAMSUNG PM9A3 (M.2/U.2)
    - Supports 32 namespaces.
- Software
  - Ubuntu Server 22.04.2 LTS

As a FreeBSD lover, I would have liked to use the nvmecontrol command for this test, but a bug makes it impossible to create namespaces with it.

I had no choice but to use Linux.

FYI: The bug will be fixed in FreeBSD 13.2-RELEASE.

Check current status

First, check the current status of the NVMe SSD.

Check a target device:

    $ sudo nvme list
    Node                  SN      Model                                    Namespace Usage                      Format           FW Rev
    --------------------- ------- ---------------------------------------- --------- -------------------------- ---------------- --------
    /dev/nvme0n1          Serial  SAMSUNG MZ1L21T9HCLS-00A07               1         0.00 B / 1.92 TB           512 B + 0 B      GDC7302Q

Check usable LBA formats:

    $ sudo nvme id-ns -H /dev/nvme0n1 | grep ^LBA
    LBA Format 0 : Metadata Size: 0 bytes - Data Size: 512 bytes - Relative Performance: 0 Best (in use)
    LBA Format 1 : Metadata Size: 0 bytes - Data Size: 4096 bytes - Relative Performance: 0 Best

I recommend noting this list down for later use, because it seems we can't retrieve it when no namespace exists. We could always create a temporary namespace and get the list when the time comes, but I'd like to avoid doing the work twice.
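To keep that note in a script-friendly form, the lines printed by the grep above can be reduced to one short line per format. This is only a minimal sketch: the function name lba_formats is my own, and it assumes the human-readable line layout shown above, which may differ between nvme-cli versions.

```shell
# lba_formats: read `nvme id-ns -H` output on stdin and print
# "<index> <data size in bytes> <in-use or ->" for each LBA format.
# Hypothetical helper; assumes lines shaped like the sample above.
lba_formats() {
    awk '/^LBA Format/ {
        idx = $3                        # third field is the format index
        size = ""
        for (i = 1; i < NF - 1; i++)    # the number after "Data Size:"
            if ($i == "Data" && $(i + 1) == "Size:") size = $(i + 2)
        inuse = ($0 ~ /\(in use\)/) ? "in-use" : "-"
        print idx, size, inuse
    }'
}
```

It would be used as `sudo nvme id-ns -H /dev/nvme0n1 | lba_formats > lba-formats.txt`. For the two lines above it prints `0 512 in-use` and `1 4096 -`.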

Check NVMe controllers:

    $ sudo nvme list-ctrl /dev/nvme0
    num of ctrls present: 1
    [ 0]:0x6

NVMe devices may have more than one controller. Every namespace must be assigned to one or more controllers. Note the controller IDs as well, because they are needed later.
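If you prefer not to copy the IDs by hand, they can be picked out of the list-ctrl output. A small sketch under the assumption that each controller is printed on its own line in the form `[ 0]:0x6`; the function name controller_ids is hypothetical.

```shell
# controller_ids: read `nvme list-ctrl` output on stdin and print one
# controller ID per line (e.g. 0x6). Hypothetical helper; assumes one
# "[ N]:0xID" entry per line, as in the sample above.
controller_ids() {
    sed -n 's/^.*\[ *[0-9][0-9]*]:\(0x[0-9a-fA-F][0-9a-fA-F]*\).*$/\1/p'
}
```

For example, `sudo nvme list-ctrl /dev/nvme0 | controller_ids` should print `0x6` on the device used here.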

Check current details of the controller:

    $ sudo nvme id-ctrl /dev/nvme0 | grep -E 'nn|nvmcap'
    tnvmcap : 1920383410176
    unvmcap : 0
    nn : 32

tnvmcap is “Total NVM Capacity”, and unvmcap is “Unallocated NVM Capacity”. Both are in bytes.

nn is “Number of Namespaces”, the essential property for this article: it is the number of namespaces the device can hold. If it is 1, there is nothing to gain.

This information shows that the SSD can handle up to 32 namespaces, but no more namespaces can be added right now because there is no unallocated capacity left.
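As a quick sanity check of these numbers, the following sketch works out how many equally sized namespaces would fit once the capacity is freed. The 100 GiB target size is my own assumption for illustration, and the calculation ignores any allocation granularity the firmware may impose.

```shell
# Values taken from the id-ctrl output above.
tnvmcap=1920383410176   # total NVM capacity in bytes
nn=32                   # maximum number of namespaces

# Assumed target: equally sized 100 GiB namespaces.
ns_bytes=$((100 * 1024 * 1024 * 1024))

# Namespaces that fit by capacity (integer division), capped at nn.
fit=$((tnvmcap / ns_bytes))
if [ "$fit" -gt "$nn" ]; then fit=$nn; fi
leftover=$((tnvmcap - fit * ns_bytes))

echo "namespaces that fit: $fit"
echo "bytes left over:     $leftover"
```

With these values capacity, not nn, is the limit: 17 namespaces of 100 GiB fit, leaving 95022309376 bytes.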

Remove namespaces

Run the nvme delete-ns command to remove an existing namespace.

Needless to say, all data stored in the removed namespace will be gone, and there is no confirmation prompt. I think it is impossible to recover the data by any ordinary means, because the management information in the device firmware layer is cleared. BE CAREFUL!
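Since the command itself never asks, one option is to put a small guard of your own in front of it. The sketch below only prints the delete-ns command after the NSID has been typed a second time; it never runs nvme itself. The function name confirm_delete_ns and the re-typing scheme are my own assumptions, not part of nvme-cli.

```shell
# confirm_delete_ns <controller device> <nsid>
# Reads one line from stdin. If it repeats the NSID, the delete-ns
# command is printed on stdout; otherwise nothing is printed and the
# function returns 1. Nothing is executed here.
confirm_delete_ns() {
    printf 'Type the NSID (%s) again to delete it from %s: ' "$2" "$1" >&2
    IFS= read -r answer || return 1
    if [ "$answer" = "$2" ]; then
        echo "nvme delete-ns $1 -n $2"
    else
        echo "aborted" >&2
        return 1
    fi
}
```

Review the printed command and then run it with sudo by hand.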

    $ sudo nvme delete-ns /dev/nvme0 -n 1
    delete-ns: Success, deleted nsid:1

After that, unvmcap has increased.

    $ sudo nvme id-ctrl /dev/nvme0 | grep nvmcap
    tnvmcap : 1920383410176
    unvmcap : 1920383410176

Add namespaces

Now, at last, create new namespaces.

    $ sudo nvme create-ns /dev/nvme0 -f 0 -s 209715200 -c 209715200
    create-ns: Success, created nsid:1

The options mean the following:

| Option | Meaning |
|---|---|
| -f, --flbas | Specify the LBA format. The value is an index from the list in the nvme id-ns output. This example uses 0 (512-byte sectors). |
| -s, --nsze | Specify the namespace size as a number of blocks. The block size is determined by the '-f' option. This example uses 209715200 (= 100 GiB / 512 bytes). |
| -c, --ncap | Specify the namespace capacity as a number of blocks. The value must be equal to or less than the value given to '-s'. If it is less than “nsze”, the namespace will get over-provisioning. |
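Because '-s' and '-c' take block counts rather than bytes, the value has to be recalculated whenever the LBA format changes. A minimal sketch of that arithmetic; the helper name blocks_for is my own.

```shell
# blocks_for <size in GiB> <LBA data size in bytes>
# Prints the number of logical blocks for that size.
# Hypothetical helper; plain integer arithmetic only.
blocks_for() {
    echo $(( $1 * 1024 * 1024 * 1024 / $2 ))
}

blocks_for 100 512    # 209715200, the value used above
blocks_for 100 4096   # 26214400, if LBA format 1 were chosen instead
```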

The added namespace becomes available once it is attached to a controller.

    $ sudo nvme attach-ns /dev/nvme0 -n 1 -c 0x6
    attach-ns: Success, nsid:1

Add one more namespace in the same way.

    $ sudo nvme create-ns /dev/nvme0 -s 209715200 -c 209715200 -f 0
    create-ns: Success, created nsid:2
    $ sudo nvme attach-ns /dev/nvme0 -n 2 -c 0x6
    attach-ns: Success, nsid:2
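For more than a couple of namespaces, repeating the create and attach pair by hand gets tedious. The sketch below only prints the command pairs so they can be reviewed first. The function name plan_namespaces is hypothetical, and it assumes that NSIDs are handed out as 1, 2, 3 and so on, as happened above; on a real device, check the “created nsid” line before attaching.

```shell
# plan_namespaces <device> <count> <blocks> <flbas> <controller id>
# Prints (does not run) the create-ns/attach-ns commands for <count>
# equally sized namespaces. Hypothetical helper; assumes sequential
# NSIDs starting at 1.
plan_namespaces() {
    dev=$1 count=$2 blocks=$3 flbas=$4 cntlid=$5
    nsid=1
    while [ "$nsid" -le "$count" ]; do
        echo "nvme create-ns $dev -f $flbas -s $blocks -c $blocks"
        echo "nvme attach-ns $dev -n $nsid -c $cntlid"
        nsid=$((nsid + 1))
    done
}

plan_namespaces /dev/nvme0 2 209715200 0 0x6
```

The last line prints the same four commands that were run manually above, without sudo.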

It turns out the system recognises them as nvme0n1 and nvme0n2.

    $ sudo nvme list
    Node                  SN      Model                                    Namespace Usage                      Format           FW Rev
    --------------------- ------- ---------------------------------------- --------- -------------------------- ---------------- --------
    /dev/nvme0n1          Serial  SAMSUNG MZ1L21T9HCLS-00A07               1         107.37 GB / 107.37 GB      512 B + 0 B      GDC7302Q
    /dev/nvme0n2          Serial  SAMSUNG MZ1L21T9HCLS-00A07               2         107.37 GB / 107.37 GB      512 B + 0 B      GDC7302Q
    $ lsblk | grep nvme0
    nvme0n1 259:0 0 100G 0 disk
    nvme0n2 259:1 0 100G 0 disk
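The two tools seem to disagree on the size (107.37 GB versus 100G), but that is only decimal versus binary units. A short worked check, using the block count and LBA size from the create-ns call above:

```shell
blocks=209715200
lba=512
bytes=$((blocks * lba))

# Decimal gigabytes (10^9 bytes), the unit nvme list prints.
gb=$(LC_ALL=C awk -v b="$bytes" 'BEGIN { printf "%.2f", b / 1000000000 }')
# Binary gibibytes (2^30 bytes), the unit lsblk prints as "G".
gib=$((bytes / 1024 / 1024 / 1024))

echo "$bytes bytes = $gb GB = $gib GiB"
```

This prints `107374182400 bytes = 107.37 GB = 100 GiB`, which matches both outputs.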

The S.M.A.R.T. output for each namespace is below. It seems that namespaces can have individual S.M.A.R.T. data if the device supports it.

nvme0n1:

    $ sudo smartctl -a /dev/nvme0n1
    smartctl 7.2 2020-12-30 r5155 [x86_64-linux-5.15.0-60-generic] (local build)
    Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

    === START OF INFORMATION SECTION ===
    Model Number:                       SAMSUNG MZ1L21T9HCLS-00A07
    Serial Number:                      S666NE0Rxxxxxx
    Firmware Version:                   GDC7302Q
    PCI Vendor/Subsystem ID:            0x144d
    IEEE OUI Identifier:                0x002538
    Total NVM Capacity:                 1,920,383,410,176 [1.92 TB]
    Unallocated NVM Capacity:           1,705,635,045,376 [1.70 TB]
    Controller ID:                      6
    NVMe Version:                       1.4
    Number of Namespaces:               32
    Namespace 1 Size/Capacity:          107,374,182,400 [107 GB]
    Namespace 1 Formatted LBA Size:     512
    Local Time is:                      Fri May 5 10:11:28 2023 UTC
    Firmware Updates (0x17):            3 Slots, Slot 1 R/O, no Reset required
    Optional Admin Commands (0x005f):   Security Format Frmw_DL NS_Mngmt Self_Test MI_Snd/Rec
    Optional NVM Commands (0x005f):     Comp Wr_Unc DS_Mngmt Wr_Zero Sav/Sel_Feat Timestmp
    Log Page Attributes (0x0e):         Cmd_Eff_Lg Ext_Get_Lg Telmtry_Lg
    Maximum Data Transfer Size:         512 Pages
    Warning Comp. Temp. Threshold:      77 Celsius
    Critical Comp. Temp. Threshold:     85 Celsius
    Namespace 1 Features (0x1a):        NA_Fields No_ID_Reuse NP_Fields

    Supported Power States
    St Op     Max   Active     Idle   RL RT WL WT  Ent_Lat  Ex_Lat
     0 +     8.25W   8.25W       -    0  0  0  0       70      70

    Supported LBA Sizes (NSID 0x1)
    Id Fmt  Data  Metadt  Rel_Perf
     0 +     512       0         0
     1 -    4096       0         0

    === START OF SMART DATA SECTION ===
    SMART overall-health self-assessment test result: PASSED

    SMART/Health Information (NVMe Log 0x02)
    Critical Warning:                   0x00
    Temperature:                        51 Celsius
    Available Spare:                    100%
    Available Spare Threshold:          10%
    Percentage Used:                    13%
    Data Units Read:                    3,211,446,954 [1.64 PB]
    Data Units Written:                 3,198,599,733 [1.63 PB]
    Host Read Commands:                 7,123,174,983
    Host Write Commands:                3,057,480,670
    Controller Busy Time:               35,138
    Power Cycles:                       183
    Power On Hours:                     8,877
    Unsafe Shutdowns:                   106
    Media and Data Integrity Errors:    0
    Error Information Log Entries:      14
    Warning Comp. Temperature Time:     0
    Critical Comp. Temperature Time:    0
    Temperature Sensor 1:               51 Celsius
    Temperature Sensor 2:               80 Celsius

    Error Information (NVMe Log 0x01, 16 of 64 entries)
    Num   ErrCount  SQId   CmdId  Status  PELoc          LBA  NSID    VS
      0         14     0  0xb007  0x4238  0x000            0     1     -
      1         13     0  0x8007  0x4238  0x000            0     1     -
      2         12     0  0x8006  0x4238  0x000            0     1     -
      3         11     0  0x7007  0x422a  0x000            0     0     -

nvme0n2:

    $ sudo smartctl -a /dev/nvme0n2
    smartctl 7.2 2020-12-30 r5155 [x86_64-linux-5.15.0-60-generic] (local build)
    Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

    === START OF INFORMATION SECTION ===
    Model Number:                       SAMSUNG MZ1L21T9HCLS-00A07
    Serial Number:                      S666NE0Rxxxxxx
    Firmware Version:                   GDC7302Q
    PCI Vendor/Subsystem ID:            0x144d
    IEEE OUI Identifier:                0x002538
    Total NVM Capacity:                 1,920,383,410,176 [1.92 TB]
    Unallocated NVM Capacity:           1,705,635,045,376 [1.70 TB]
    Controller ID:                      6
    NVMe Version:                       1.4
    Number of Namespaces:               32
    Namespace 2 Size/Capacity:          107,374,182,400 [107 GB]
    Namespace 2 Formatted LBA Size:     512
    Local Time is:                      Fri May 5 10:12:01 2023 UTC
    Firmware Updates (0x17):            3 Slots, Slot 1 R/O, no Reset required
    Optional Admin Commands (0x005f):   Security Format Frmw_DL NS_Mngmt Self_Test MI_Snd/Rec
    Optional NVM Commands (0x005f):     Comp Wr_Unc DS_Mngmt Wr_Zero Sav/Sel_Feat Timestmp
    Log Page Attributes (0x0e):         Cmd_Eff_Lg Ext_Get_Lg Telmtry_Lg
    Maximum Data Transfer Size:         512 Pages
    Warning Comp. Temp. Threshold:      77 Celsius
    Critical Comp. Temp. Threshold:     85 Celsius
    Namespace 2 Features (0x1a):        NA_Fields No_ID_Reuse NP_Fields

    Supported Power States
    St Op     Max   Active     Idle   RL RT WL WT  Ent_Lat  Ex_Lat
     0 +     8.25W   8.25W       -    0  0  0  0       70      70

    Supported LBA Sizes (NSID 0x2)
    Id Fmt  Data  Metadt  Rel_Perf
     0 +     512       0         0
     1 -    4096       0         0

    === START OF SMART DATA SECTION ===
    SMART overall-health self-assessment test result: PASSED

    SMART/Health Information (NVMe Log 0x02)
    Critical Warning:                   0x00
    Temperature:                        51 Celsius
    Available Spare:                    100%
    Available Spare Threshold:          10%
    Percentage Used:                    13%
    Data Units Read:                    3,211,446,954 [1.64 PB]
    Data Units Written:                 3,198,599,733 [1.63 PB]
    Host Read Commands:                 7,123,174,983
    Host Write Commands:                3,057,480,670
    Controller Busy Time:               35,138
    Power Cycles:                       183
    Power On Hours:                     8,877
    Unsafe Shutdowns:                   106
    Media and Data Integrity Errors:    0
    Error Information Log Entries:      14
    Warning Comp. Temperature Time:     0
    Critical Comp. Temperature Time:    0
    Temperature Sensor 1:               51 Celsius
    Temperature Sensor 2:               80 Celsius

    Error Information (NVMe Log 0x01, 16 of 64 entries)
    Num   ErrCount  SQId   CmdId  Status  PELoc          LBA  NSID    VS
      0         14     0  0xb007  0x4238  0x000            0     1     -
      1         13     0  0x8007  0x4238  0x000            0     1     -
      2         12     0  0x8006  0x4238  0x000            0     1     -
      3         11     0  0x7007  0x422a  0x000            0     0     -

How namespaces look on UEFI or OS

Now let's get down to business.

It is good that NVMe devices can have multiple namespaces, but how are they, especially the second and later ones, recognised by UEFI (BIOS) or an OS? The NVMe namespace is a device-splitting function close to the hardware layer, yet as a PCIe device there is still only one physical device. I think software support, such as the NVMe driver of the host system, is an important part of making the function work. Whether multiple namespaces are supported at all is a particular worry on UEFI.

It would be wonderful if the system could boot from an arbitrary namespace, but I found no information on the net, so I checked it out myself.

Linux

On Linux, the namespaces show up as individual devices such as /dev/nvme0n1, /dev/nvme0n2 and so on, as we have seen.

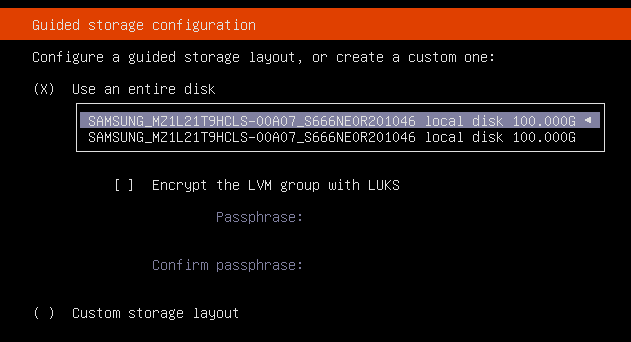

The Ubuntu installer can choose them as the install destination, so it is possible to install to any selected namespace.

FreeBSD

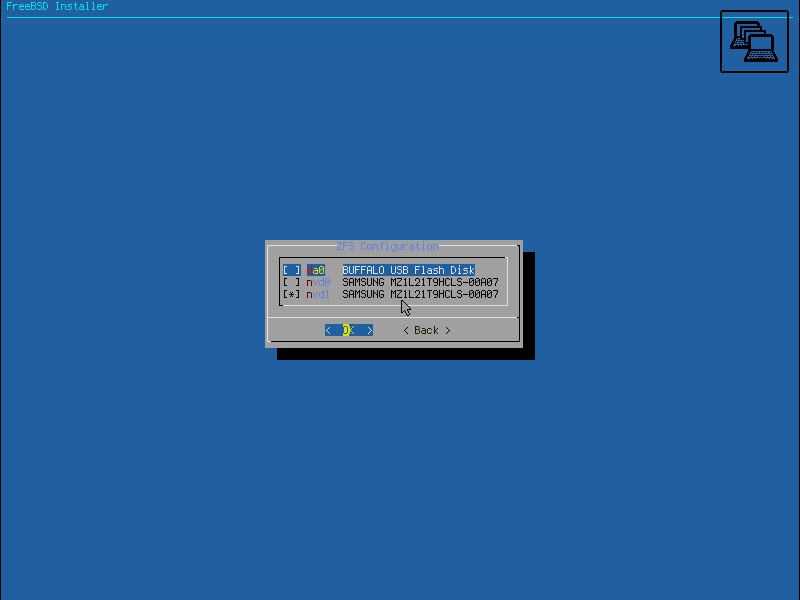

On FreeBSD, they appear as /dev/nvme0ns1 and /dev/nvme0ns2 in the same way. In addition, paired devices such as /dev/nvd0 and /dev/nvd1 are created as general disk-layer devices.

It is possible to choose any namespace for installation in this case, too.

Windows

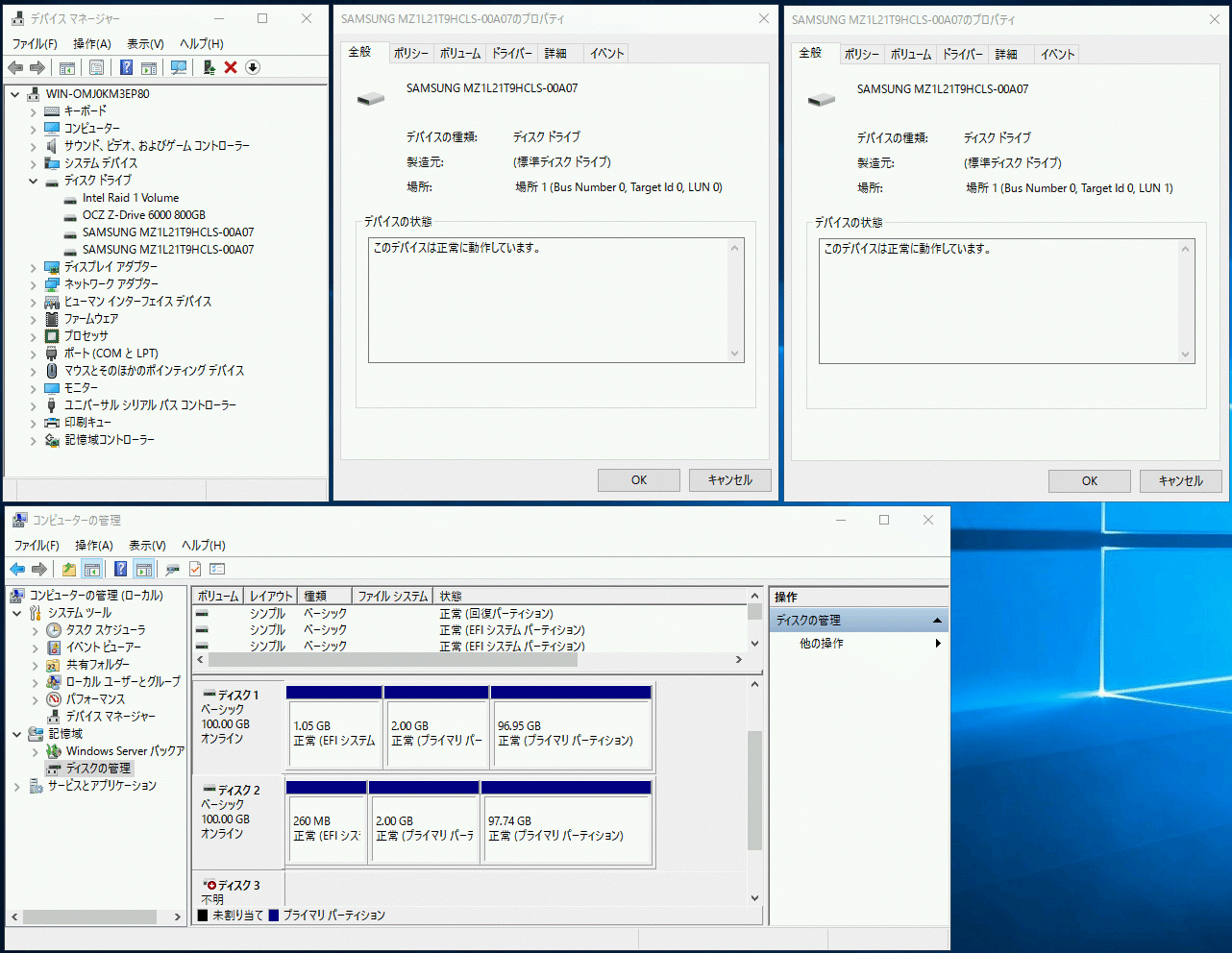

On Windows, they are detected as different LUN devices.

Its installer recognises them as individual drives, so it seems possible to install to the selected drive.

UEFI (BIOS)

On UEFI, I think support for multiple NVMe namespaces may vary from motherboard to motherboard.

ThinkServer TS150

The Lenovo ThinkServer TS150, launched around 2016, has no M.2/U.2 slots. It supports NVMe devices via PCIe slots, but its UEFI appears to treat the first namespace as the boot device (or else, perhaps, it gives priority to the first UEFI boot entry).

In this case, the SSD has 2 namespaces: the first one holds Ubuntu and the second one holds FreeBSD.

The TS150's boot entries are below. It seems that both namespaces and both operating systems are correctly registered.

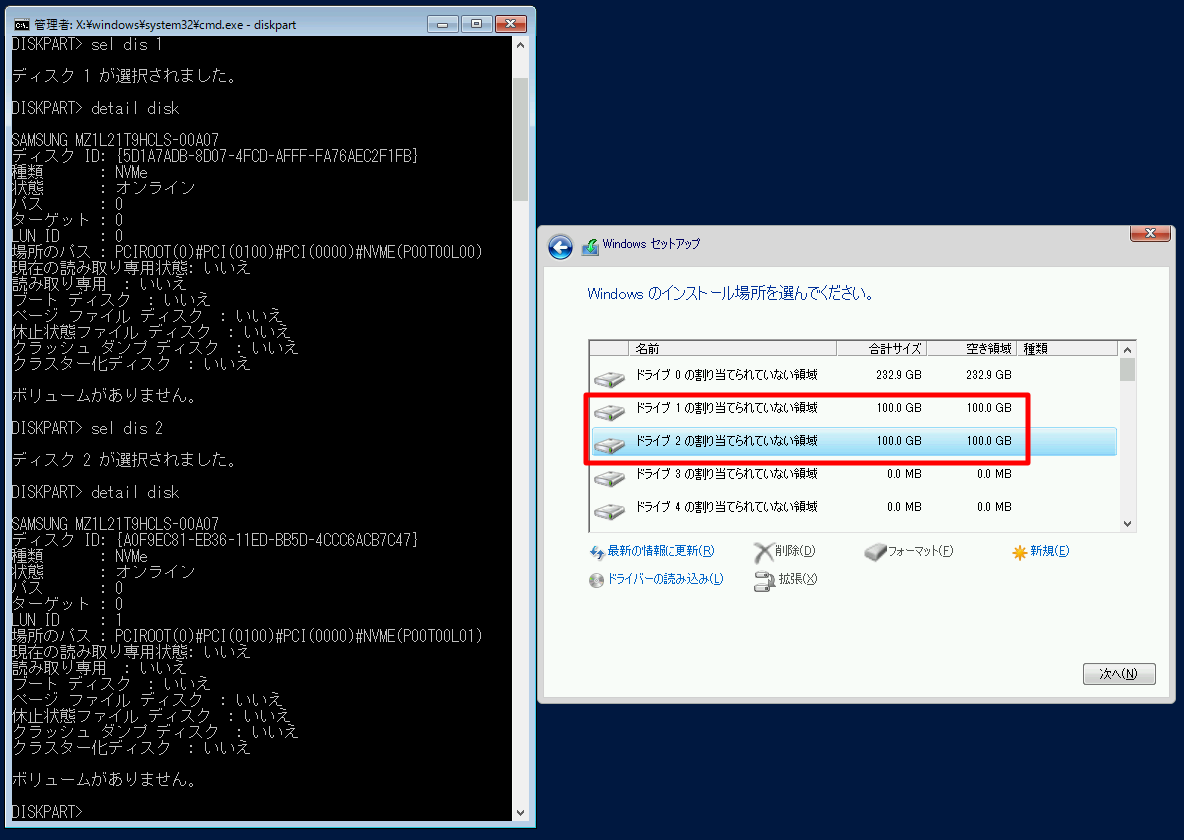

    $ efibootmgr -v
    BootCurrent: 0000
    Timeout: 1 seconds
    BootOrder: 0000,0002,0003
    Boot0000* ubuntu HD(1,GPT,0066403c-e597-4cfa-aa56-ad2f79960c92,0x800,0x219800)/File(\EFI\UBUNTU\SHIMX64.EFI)
    Boot0002* FreeBSD HD(1,GPT,a0fad4cc-eb36-11ed-bb5d-4ccc6acb7c47,0x28,0x82000)/File(\EFI\FREEBSD\LOADER.EFI)
    Boot0003* UEFI OS HD(1,GPT,a0fad4cc-eb36-11ed-bb5d-4ccc6acb7c47,0x28,0x82000)/File(\EFI\BOOT\BOOTX64.EFI)..BO
    $ sudo blkid
    /dev/nvme0n1p1: UUID="8030-A0AE" BLOCK_SIZE="512" TYPE="vfat" PARTUUID="0066403c-e597-4cfa-aa56-ad2f79960c92"
    /dev/nvme0n2p1: SEC_TYPE="msdos" UUID="C23B-12F9" BLOCK_SIZE="512" TYPE="vfat" PARTLABEL="efiboot0" PARTUUID="a0fad4cc-eb36-11ed-bb5d-4ccc6acb7c47"
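Which namespace a boot entry points to can be read off by comparing the GPT partition GUID inside each HD(...) path with the PARTUUID values from blkid. The sketch below automates that comparison for saved copies of the two outputs. The function name boot_entry_devices and the file-based interface are my own assumptions, and it relies on the line layouts shown above.

```shell
# boot_entry_devices <blkid output file> <efibootmgr -v output file>
# Prints "<BootNNNN> <device>" for every boot entry whose GPT partition
# GUID matches a PARTUUID in the blkid output ("unknown" otherwise).
# Hypothetical helper; assumes the line layouts shown above.
boot_entry_devices() {
    awk '
        # First file: blkid output. Remember the device for each PARTUUID.
        NR == FNR {
            if (match($0, /PARTUUID="[^"]*"/)) {
                uuid = tolower(substr($0, RSTART + 10, RLENGTH - 11))
                dev = $1
                sub(/:$/, "", dev)
                by_uuid[uuid] = dev
            }
            next
        }
        # Second file: efibootmgr -v output. Only BootNNNN lines carry a GUID.
        /^Boot[0-9A-Fa-f][0-9A-Fa-f][0-9A-Fa-f][0-9A-Fa-f]/ && match($0, /GPT,[0-9A-Fa-f-]+,/) {
            guid = tolower(substr($0, RSTART + 4, RLENGTH - 5))
            dev = (guid in by_uuid) ? by_uuid[guid] : "unknown"
            print substr($1, 1, 8), dev
        }
    ' "$1" "$2"
}
```

After `sudo blkid > blkid.txt` and `efibootmgr -v > efi.txt`, calling `boot_entry_devices blkid.txt efi.txt` on the output above maps Boot0000 to /dev/nvme0n1p1 and both Boot0002 and Boot0003 to /dev/nvme0n2p1.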

Here, run the efibootmgr -n 0003 command to set the next boot to FreeBSD.

FreeBSD then does start up on the next boot, although the boot device shown by the UEFI is still “Ubuntu.”

However, the efibootmgr -o 0003,0002,0000 command, which sets the boot order to “UEFI OS” (this is FreeBSD's boot loader), “FreeBSD”, “ubuntu”, seems to have no effect, because Ubuntu starts up as before.

This behaviour is slightly weird, but it shows that the system can boot from a chosen namespace among several. If you want to switch the boot OS regularly, it is a good idea to install a boot manager such as rEFInd or CloverBootloader into namespace 1.

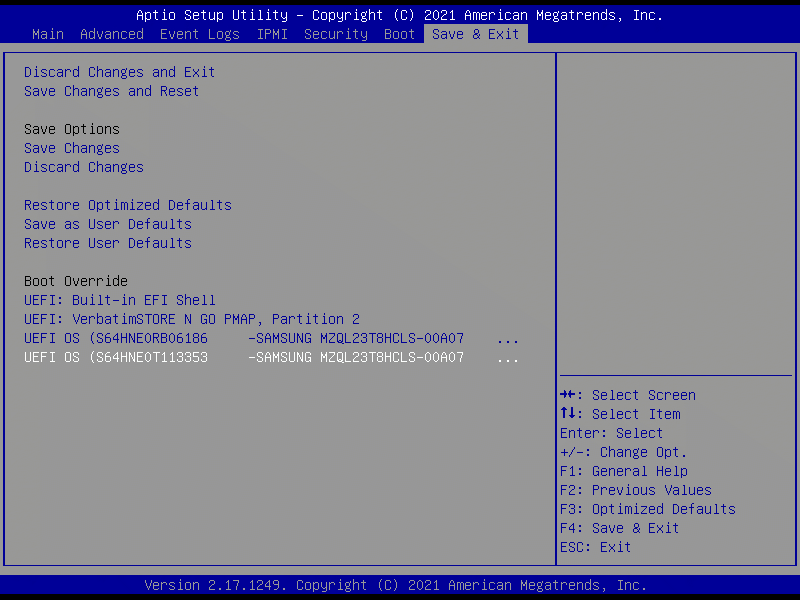

Supermicro X10SRL-F

The Supermicro X10SRL-F motherboard appears to be able to boot from any namespace that has a valid ESP.

The two items are hard to tell apart because they share the name “UEFI OS”, but the first is the FreeBSD boot loader located on the ESP of namespace 1, and the second is the Proxmox VE boot loader, which is GRUB, on the ESP of namespace 2. The boot order gives priority to the second “UEFI OS”, so Proxmox VE is launched as expected. That's impressive!