ZFS can't remove special vdevs from RAIDZ pools

It seems that ZFS cannot remove special vdevs from any kind of RAIDZ pool as of OpenZFS v2.1.4. I guess this behaviour is by design, caused by a limitation of top-level vdev removal.

Suppose there is a pool that consists of a RAID-Z1 vdev and a mirrored special vdev.

# zpool status ztank

pool: ztank

state: ONLINE

config:

NAME STATE READ WRITE CKSUM

ztank ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

da1 ONLINE 0 0 0

da2 ONLINE 0 0 0

da3 ONLINE 0 0 0

special

mirror-1 ONLINE 0 0 0

da4 ONLINE 0 0 0

da5 ONLINE 0 0 0

errors: No known data errors

Now, run the zpool remove command to remove the special vdev, and an error like the one below occurs.

# zpool remove ztank mirror-1

cannot remove mirror-1: invalid config; all top-level vdevs must have the same sector size and not be raidz.

I thought that removing the top-level vdev “mirror-1” all at once might be the problem, so I tried removing each member of the vdev instead, but it made no difference.

# zpool detach ztank da5

# zpool remove ztank da4

cannot remove .....

By the way, it has been possible for some time to remove a slog or an L2ARC, which are also kinds of top-level vdev, from any RAIDZ pool. Besides, non-RAIDZ pools have no such limitation on removing top-level vdevs.
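The rule can be written down as a toy model. This is only a sketch built from the error message and the observations in this post, not from the OpenZFS source; the `Vdev` class, the `can_remove` function, and the default `ashift` value are all hypothetical names and values chosen for illustration.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class Vdev:
    name: str
    kind: str         # "mirror", "stripe", or "raidz"
    role: str         # "data", "special", "log", or "cache"
    ashift: int = 12  # sector size as a power of two (assumed default)


def can_remove(pool: list[Vdev], target: str) -> tuple[bool, str]:
    """Guess whether `zpool remove` would accept the target vdev."""
    vdev = next(v for v in pool if v.name == target)
    # Per the post, slog and L2ARC devices can be removed from any pool.
    if vdev.role in ("log", "cache"):
        return True, "log and cache devices are removable from any pool"
    # Otherwise the rule from the error message applies to the pool as a whole.
    top_level = [v for v in pool if v.role in ("data", "special")]
    if any(v.kind == "raidz" for v in top_level):
        return False, "a top-level vdev is raidz"
    if len({v.ashift for v in top_level}) > 1:
        return False, "top-level vdevs differ in sector size"
    return True, "data can be evacuated to the remaining vdevs"


# The pool from this post: one RAID-Z1 data vdev plus a mirrored special vdev.
ztank = [
    Vdev("raidz1-0", "raidz", "data"),
    Vdev("mirror-1", "mirror", "special"),
]
print(can_remove(ztank, "mirror-1"))
```

In this model the special vdev is rejected only because of its RAID-Z1 neighbour; the same mirror in an all-mirror pool would pass the check.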

I had been preparing to add a special vdev to my real RAIDZ2 pool, but that now looks a little hard to do. I'm glad I tried this on a VM beforehand… L2ARCs and slogs can be removed from any RAIDZ pool, so perhaps special vdev removal will become possible in the future. Or perhaps it won't, because removing a special vdev requires the data in it to be strictly evacuated to the main vdevs?

For the time being, I have no choice but to use p2L2ARC as an alternative.

1.5 Peta-Byte written PM9A3 doesn't work with PS5

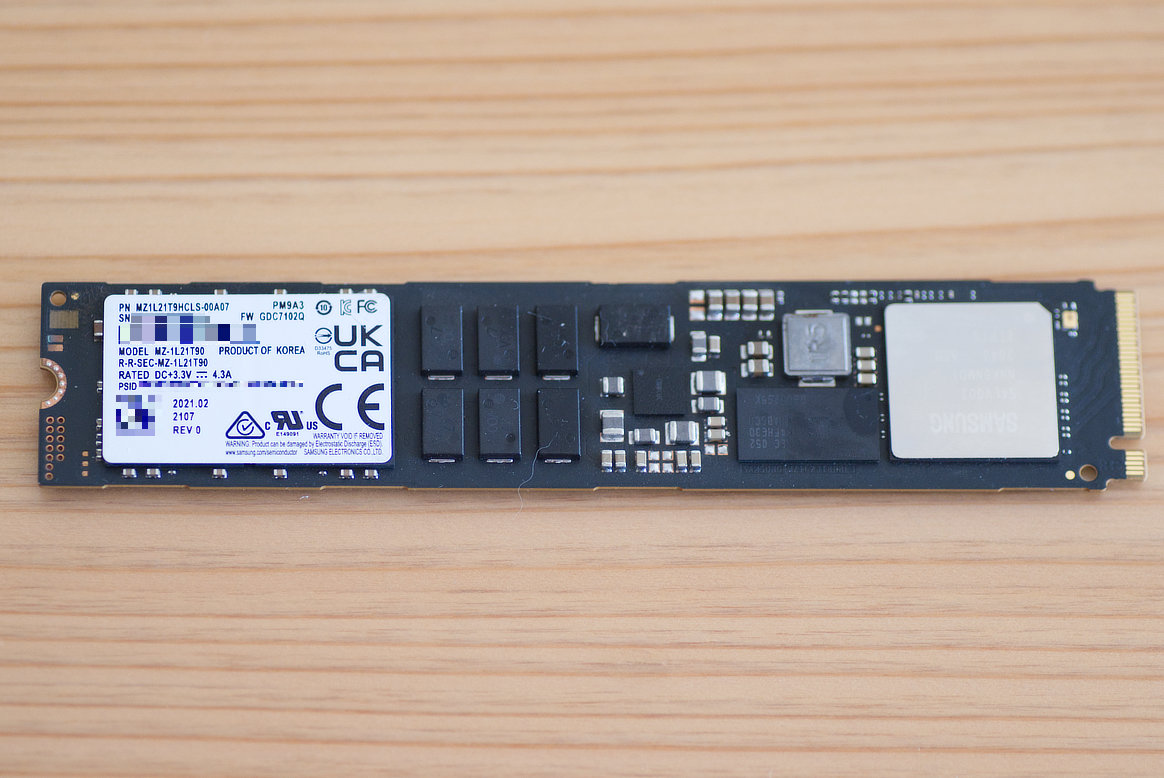

I found a Samsung PCIe 4.0 SSD, the PM9A3 1.92TB (MZ1L21T9HCLS-00A07), on sale for just a little over 20000 JPY at a certain place. The SSD has had 1520465GB = 1.5PB read and written, so it's slightly fishy, but the price is quite low for a PCIe Gen4 2TB NVMe SSD. Being an enterprise drive, its endurance is rated at 1 DWPD, which is equivalent to 3540 TBW. This means that, according to the spec, more than half of its lifetime remains even with 1.5PB written. I thought the SSD would be a good fit for my PS5 and bought it.

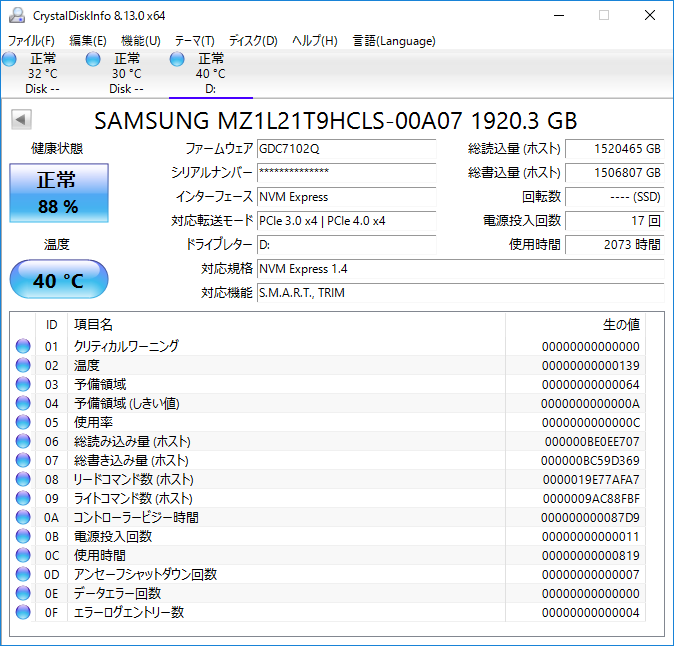

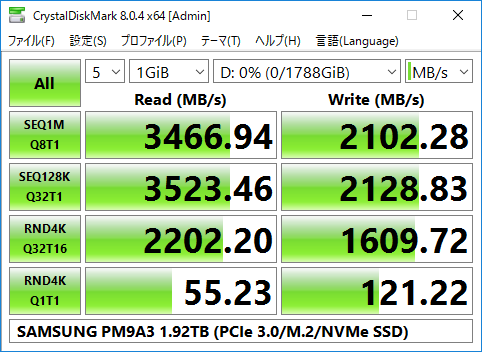

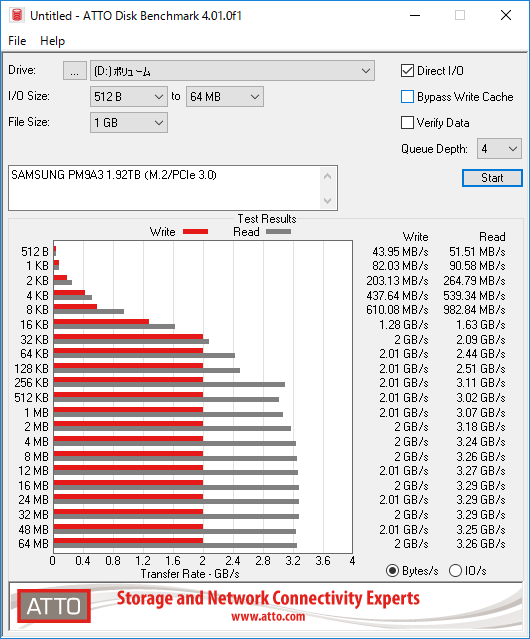

The S.M.A.R.T. information and benchmarks are shown here, as always.

“Start Stop Count” and “Power On Hours” are too low for the amount of data read and written. I guess the drive may be leftover stock from an endurance test. A remaining lifetime of 88% after 1.5PB written implies a theoretical endurance of about 12.5PB.

The benchmark results are from a PCIe 3.0 environment. I don't have any PCIe 4.0 machines yet.

During benchmarking, the SSD heats up to 80 degrees Celsius according to S.M.A.R.T. Cooling should be kept in mind.
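The endurance arithmetic can be checked with a few lines of Python. This is just a sketch using the figures quoted in this post; the variable names are made up for illustration, and it assumes that the S.M.A.R.T. remaining-lifetime percentage scales linearly with the bytes written.

```python
# Figures taken from this post.
WRITTEN_TB = 1520465 / 1000  # 1520465 GB written, expressed in decimal TB
RATED_TBW = 3540             # rated endurance quoted above, in TB
SMART_REMAINING = 0.88       # remaining lifetime reported by S.M.A.R.T.

# Share of the rated endurance still left according to the spec.
spec_remaining = 1 - WRITTEN_TB / RATED_TBW

# If 12% of the life was used up by WRITTEN_TB, extrapolate to 100%.
implied_tbw = WRITTEN_TB / (1 - SMART_REMAINING)

print(f"remaining by spec:  {spec_remaining:.1%}")
print(f"implied endurance: {implied_tbw / 1000:.1f} PB")
```

With the exact byte count the extrapolation comes out slightly above the figure in the text, which starts from the rounded 1.5PB.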

And now, the point of interest: does it work with the PS5? It doesn't…

When I power on my PS5 with the PM9A3 installed, the screen stays completely black and the BD drive spins up and down repeatedly. It seems to reset and reboot over and over during boot.

I tried installing another SSD, a PCIe 3.0 one, and my PS5 showed the “Can't use the M.2 SSD inserted in the expansion slot.” screen, so the slot itself is fine. This tells me that the PM9A3 can't work with the PS5 for some reason as of now. It's too bad.

Hmmm, what should I do with this iffy SSD…

VirtIO RNG may cause high CPU utilization by rand_harvestq in FreeBSD 13 VM

I found that my home server's power consumption had suddenly increased. The difference was up to 30W, so it was obviously not a measurement error.

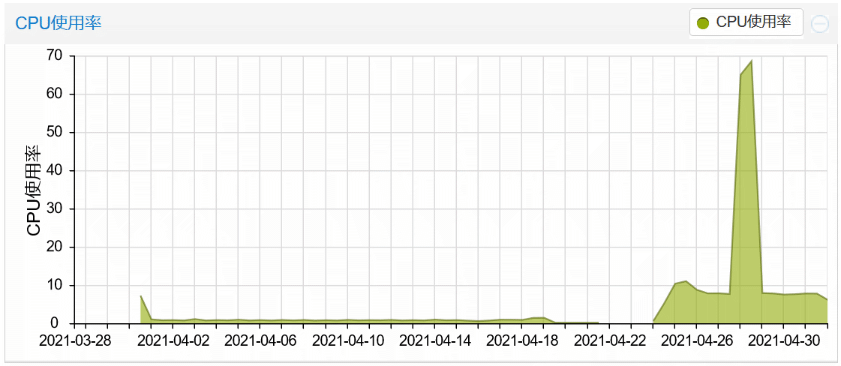

It was caused by powering up a FreeBSD VM on Proxmox VE, but I couldn't think of any reasonable cause. When I casually looked at PVE's CPU utilization graph, I found it had increased considerably after upgrading to 13.0-RELEASE.

I checked the output of top on FreeBSD and found no heavily loaded processes, but on closer look it showed that “System” was using 4-5% CPU.

That meant some kind of system process was eating CPU.

So I checked the details with top -SP, and found a rand_harvestq process constantly eating 40-80% of one CPU core.

Judging from its name, the process harvests entropy for random numbers. See my article about entropy harvesting.

There is nothing weird in the system variables relevant to harvesting. If I had to point something out, using two random sources, 'VirtIO Entropy Adapter' (VirtIO RNG) and 'Intel Secure Key RNG' (RDRAND), is specific to a system running on a virtual machine.

$ sysctl kern.random

kern.random.fortuna.concurrent_read: 1

kern.random.fortuna.minpoolsize: 64

kern.random.rdrand.rdrand_independent_seed: 0

kern.random.use_chacha20_cipher: 1

kern.random.block_seeded_status: 0

kern.random.random_sources: 'VirtIO Entropy Adapter','Intel Secure Key RNG'

kern.random.harvest.mask_symbolic: VMGENID,PURE_VIRTIO,PURE_RDRAND,[UMA],[FS_ATIME],SWI,INTERRUPT,NET_NG,[NET_ETHER],NET_TUN,MOUSE,KEYBOARD,ATTACH,CACHED

kern.random.harvest.mask_bin: 100001001000000111011111

kern.random.harvest.mask: 8683999

kern.random.initial_seeding.disable_bypass_warnings: 0

kern.random.initial_seeding.arc4random_bypassed_before_seeding: 0

kern.random.initial_seeding.read_random_bypassed_before_seeding: 0

kern.random.initial_seeding.bypass_before_seeding: 1
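As a sanity check on the harvest settings, the three mask variables can be compared with each other. The sketch below only uses the values printed above; it assumes, as I understand the FreeBSD output format, that names in square brackets in mask_symbolic are sources that are not being harvested. The variable names are my own.

```python
# Values copied from the sysctl output in this post.
mask = 8683999
mask_bin = "100001001000000111011111"
mask_symbolic = (
    "VMGENID,PURE_VIRTIO,PURE_RDRAND,[UMA],[FS_ATIME],SWI,INTERRUPT,"
    "NET_NG,[NET_ETHER],NET_TUN,MOUSE,KEYBOARD,ATTACH,CACHED"
)

# The decimal mask and the binary string should describe the same number.
same_number = format(mask, "b") == mask_bin

# Assumption: bracketed names are disabled sources, the rest are enabled.
sources = mask_symbolic.split(",")
enabled = [s for s in sources if not s.startswith("[")]
disabled = [s.strip("[]") for s in sources if s.startswith("[")]

# Every enabled source should correspond to one set bit in the mask.
set_bits = bin(mask).count("1")

print("mask matches mask_bin:", same_number)
print("enabled sources:", len(enabled), "set bits:", set_bits)
print("disabled sources:", disabled)
```

The three variables agree with each other here, which fits the impression that the harvesting settings themselves are not the problem.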

And the cause of the high CPU utilization turned out to be the VirtIO Entropy Adapter after all.

As the name suggests, it is a para-virtualized random number device that lets a VM use the physical device on the host.

Common sense says it should use less CPU, but that's not so in this case.

I don't know why, though.

The VirtIO RNG's random source is set to /dev/urandom on the host, so it should not be blocking.

I haven't changed these settings at all... hmmm… why?

I decided to run the VM without the VirtIO RNG, because getting a high load from para-virtualization is putting the cart before the horse. Intel Secure Key RNG still works as a random source in FreeBSD, so it should be no problem.

The power consumption returned to its former level, so my wallet is safe from losing money.

ConnectX-3 VF finally works in Windows guest on PVE!

I finally succeeded in getting a virtual function of ConnectX-3 SR-IOV working on a Windows 10 Pro guest on Proxmox VE 6.3!

Getting the device manager to recognise the VF was a piece of cake, but I struggled with an “Error code 43” problem even though I tried installing its driver over and over. As it turned out, the cause was that PVE's built-in PF driver was outdated. I will write it up at a later date if time permits.

Let's just look at the performance of SR-IOV!

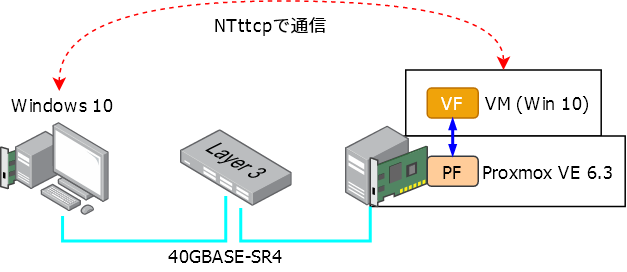

As shown in the picture above, two Windows 10 PCs were connected with ConnectX-3 cards via a Layer-3 switch; one was a physical machine (left side in the picture) and the other was a virtual machine (right side). Then I measured line speed using NTttcp, Microsoft's official tool. The sender was the left-side machine, and the receiver was the right-side one. The result is below:

There are four Task Manager windows in the picture; the active one in the bottom right corner runs on the physical machine, and the others run on the virtual machine. It turns out that the connection reaches about 26Gbps. When I tested with virtio-net instead of the VF on the VM, it reached about 12Gbps under comparable conditions. SR-IOV delivers not only higher speed but also lower CPU load. Only SR-IOV can do that!

On a side note, I also found that it peaked at about 32Gbps.

Scrubbing degraded ZFS pools can't be imported by other systems?

It's a long story, but I imported a RAIDZ pool, which consisted of 4 HDDs and was created on FreeBSD, into a ZoL environment as a degraded pool consisting of 3 HDDs.

A scrub started running automatically, so I cancelled it and exported the pool, then tried to import it with all 4 HDDs on the BSD system, but it couldn't be imported.

# zpool import zdata

cannot import 'zdata': no such pool available

This error occurred. I broke into a sweat, because the pool could have been corrupted by moving it back and forth between BSD and Linux in a degraded state.

Back in the ZoL environment, I found that the degraded pool imported successfully. After the pool got back to 4 HDDs and finished resilvering, it could be imported on the FreeBSD system.

It appears that once a degraded pool has been imported by one system, it can't be imported by other systems until its redundancy is restored, I guess. I don't know whether this behaviour is normal or a special case caused by moving the pool back and forth between FreeBSD (Legacy ZFS) and ZoL.

If this were the intended behaviour, the pool would effectively become unavailable if the PC broke down, so I guess it is not the intended behaviour. I'm logging this phenomenon because it actually happened.